Osirium PAM guide to clustering

This guide helps you understand clustering and how it works within Osirium PAM.

Introduction

The PAM Cluster feature allows multiple PAM Servers to work together as a PAM Cluster, which provides:

- Load balancing: device connection sessions can be spread across nodes, giving smoother connectivity.

- Greater scalability: your Osirium PAM deployment can grow as your demand grows.

- Increased availability and resilience: resources stay available, and loss of time and information during problems and failures is minimised.

- Simplified management: all administrative tasks are managed through a centralised node and then replicated across the cluster.

Once your PAM Cluster has been configured, a user can log on to any of the PAM Servers to access their privileged devices and task list, because the data is replicated across all nodes in the cluster.

Node identifiers

Nodes are identified by their address, which can be either a fully qualified domain name (FQDN), e.g. clusterleader.companyABC.net, or an IP address.

PAM Cluster nodes communicate with each other using their assigned addresses, so each address must be unique to allow a node to resolve the addresses of the other nodes.

If you use FQDNs, a node's FQDN must resolve to a local address on that node before the installation can continue. Every node must also be able to resolve the FQDNs of all other nodes.
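The two addressing rules above (every address unique, every node able to resolve every other node) can be pre-checked before installation. The following is a minimal Python sketch, not an Osirium tool: the function names are hypothetical, it relies only on the standard library resolver, and it does not check the "resolves to a local address" rule. Run it on each node in turn.

```python
import ipaddress
import socket
from collections import Counter


def normalise(address: str) -> str:
    """Canonicalise an address: IP literals via ipaddress, FQDNs lower-cased."""
    candidate = address.strip()
    try:
        return str(ipaddress.ip_address(candidate))
    except ValueError:
        # Not an IP literal, so treat it as an FQDN (case-insensitive, optional trailing dot).
        return candidate.rstrip(".").lower()


def duplicate_addresses(addresses: list[str]) -> list[str]:
    """Return node addresses that appear more than once after normalisation."""
    counts = Counter(normalise(a) for a in addresses)
    return sorted(a for a, n in counts.items() if n > 1)


def unresolvable_addresses(addresses: list[str]) -> list[str]:
    """Return the addresses that this host's resolver cannot resolve."""
    failed = []
    for address in addresses:
        try:
            socket.getaddrinfo(address, None)
        except (socket.gaierror, UnicodeError):
            failed.append(address)
    return failed
```

On each node you would call `unresolvable_addresses()` with the full list of cluster FQDNs and expect an empty result, and `duplicate_addresses()` with the same list to confirm that no two nodes share an address.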

Note

A cluster can have a floating IP address, but only if all nodes are on the same subnet/layer 2 VLAN. For cluster nodes on different subnets, use a sticky (source-persistent) load balancer to direct traffic across the nodes.

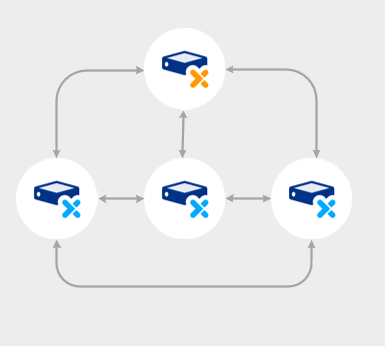
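To illustrate what "sticky" or source persistence means, the Python sketch below maps each client IP address to the same cluster node every time by hashing it. It is a simplified model of source-IP persistence, not the configuration of any particular load balancer, and the function and node names are hypothetical.

```python
import hashlib
import ipaddress


def pick_node(client_ip: str, nodes: list[str]) -> str:
    """Map a client IP address to a cluster node; the same IP always gets the same node."""
    if not nodes:
        raise ValueError("no cluster nodes configured")
    # Use the packed binary form so different spellings of one IP hash identically.
    key = ipaddress.ip_address(client_ip).packed
    digest = hashlib.sha256(key).digest()
    # Reduce the first 8 bytes of the digest to an index into the node list.
    index = int.from_bytes(digest[:8], "big") % len(nodes)
    return nodes[index]
```

A plain hash-modulo scheme remaps many clients whenever a node is added or removed; real load balancers usually keep a persistence table or use consistent hashing to limit that churn.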

Cluster nodes

Clustered PAM Servers are referred to as a PAM Cluster, and each PAM Server within it is referred to as a node. Each node is responsible for storing and processing data, as well as synchronising data to all other nodes in the cluster.

The first node in a cluster will become the leader node and all subsequent PAM Servers joining the cluster will become follower nodes.

A leader node operating on its own acts as a standalone PAM Server. To switch to a PAM Cluster setup, you need the appropriate Osirium licence, which allows you to join follower nodes.
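The role rules above can be modelled in a few lines. This is a hypothetical Python sketch, not Osirium code: `Cluster`, `add_node` and the `cluster_licence` flag (a stand-in for holding the appropriate Osirium licence) are illustrative names only.

```python
from dataclasses import dataclass, field
from enum import Enum


class Role(Enum):
    LEADER = "leader"
    FOLLOWER = "follower"


@dataclass
class Cluster:
    # Hypothetical flag standing in for "the appropriate Osirium licence".
    cluster_licence: bool = False
    roles: dict[str, Role] = field(default_factory=dict)

    def add_node(self, address: str) -> Role:
        """Assign a role to a newly built node, following the rules above."""
        if address in self.roles:
            raise ValueError(f"{address} is already a cluster member")
        if not self.roles:
            # The first node becomes the leader; on its own it is a standalone PAM Server.
            role = Role.LEADER
        elif self.cluster_licence:
            # Every later node joins as a follower, but only with a cluster licence.
            role = Role.FOLLOWER
        else:
            raise PermissionError("a cluster licence is required to join follower nodes")
        self.roles[address] = role
        return role
```

Because the leader is simply "whoever arrived first", the model guarantees exactly one leader per cluster without any extra bookkeeping.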

Note

Each cluster node retains its own session audit history and session recording data.

Leader node

The first node built is assigned the cluster leader role. There can be only one leader node within a cluster. The leader node is assigned elevated privileges and is the only node to have:

- The ability to upload the licence file that will be used for the cluster.

- A writable version of any tables that are marked as mastered and replicated amongst the nodes. Write privileges allow the leader node to write to these tables locally and then replicate the changes (read-only) to all follower nodes.

- The ability to generate the cluster joining bundle required to join a node to a cluster. A cluster joining bundle can be used only once and is valid for 24 hours or until the next bundle is generated. The bundle contains a special certificate which the joining node uses to communicate with the leader and become a trusted node.

- Privileges to modify the Osirium PAM configuration.

- Osirium PAM SuperAdmin access level privileges, allowing read/write access to Admin Interface operations and configurations.
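The leader-only privileges listed above amount to a simple authorisation rule. The Python sketch below is hypothetical: the `Operation` names summarise the list and are not Osirium identifiers, and the two non-reserved operations are illustrative examples of things any node can do.

```python
from enum import Enum, auto


class Operation(Enum):
    UPLOAD_LICENCE = auto()
    WRITE_MASTERED_TABLE = auto()
    GENERATE_JOINING_BUNDLE = auto()
    MODIFY_PAM_CONFIGURATION = auto()
    READ_REPLICATED_DATA = auto()
    EDIT_NODE_SETTINGS = auto()


# Operations that, per the list above, only the leader node may perform.
LEADER_ONLY = frozenset({
    Operation.UPLOAD_LICENCE,
    Operation.WRITE_MASTERED_TABLE,
    Operation.GENERATE_JOINING_BUNDLE,
    Operation.MODIFY_PAM_CONFIGURATION,
})


def is_allowed(operation: Operation, node_is_leader: bool) -> bool:
    """The leader may do anything; followers may do anything not reserved for the leader."""
    return node_is_leader or operation not in LEADER_ONLY
```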

Follower node

All nodes that join the cluster after the leader node are known as follower nodes. A follower node will:

- Share the same master encryption key as the leader node.

- Share the same licence file as the leader node.

- Log on using the SuperAdmin credentials that were set when the leader node was created.

- Become a trusted node to all other nodes in the PAM Cluster. Trusted nodes are allowed to read data from all other nodes, which keeps their database synchronised with the cluster, and to write data to the shared database tables.

- Delegate any local user password change request initiated in the user interface or Admin Interface to the leader node to perform.
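The password delegation behaviour can be sketched as follows. This is a hypothetical Python model, not Osirium code: the `PasswordPolicy` fields are invented for illustration, and `accepted_changes` stands in for actually storing the new password. It shows that a follower never applies its own policy and that only the leader performs the change.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PasswordPolicy:
    # Hypothetical policy fields for illustration only.
    min_length: int = 12
    require_digit: bool = True

    def accepts(self, password: str) -> bool:
        if len(password) < self.min_length:
            return False
        return not self.require_digit or any(ch.isdigit() for ch in password)


@dataclass
class Node:
    name: str
    policy: PasswordPolicy
    leader: Optional["Node"] = None  # None means this node is the leader
    accepted_changes: list[str] = field(default_factory=list)

    def change_local_password(self, user: str, new_password: str) -> bool:
        if self.leader is not None:
            # A follower never applies its own policy; it hands the request to the leader.
            return self.leader.change_local_password(user, new_password)
        if not self.policy.accepts(new_password):
            return False
        # Stand-in for actually storing the new password on the leader.
        self.accepted_changes.append(user)
        return True
```

With a follower whose local policy is laxer than the leader's, a short password is still rejected, because the follower's policy is never consulted.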

Cluster sizing guidelines

The size of a PAM Cluster (total number of nodes) can change to meet your requirements.

Below are the key cluster sizing points:

- A single PAM Server on its own is a standalone node, but it is still classed as a cluster of 1 node.

- To create a PAM Cluster for resiliency you need a minimum of 2 nodes.

- A cluster should contain a maximum of 7 nodes, which ensures that the performance of the nodes within the cluster is maintained.
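The sizing points above can be expressed as a simple check. This hypothetical Python helper encodes only the three rules stated in this guide: 1 node is standalone, 2 or more give resilience, and 7 is the recommended maximum.

```python
MAX_RECOMMENDED_NODES = 7


def describe_cluster_size(node_count: int) -> str:
    """Classify a cluster size against the sizing points in this guide."""
    if node_count < 1:
        raise ValueError("a cluster always contains at least 1 node")
    if node_count == 1:
        return "standalone: a cluster of 1 node with no resilience"
    if node_count <= MAX_RECOMMENDED_NODES:
        return "resilient cluster within the recommended size"
    return f"exceeds the recommended maximum of {MAX_RECOMMENDED_NODES} nodes"
```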

Node communication

To ensure the safe and secure transfer of data and communication between nodes, each node has its own certificate authority (CA), which is trusted by every other node. Each node creates a certificate that authenticates it as a member of the cluster, and that certificate is then trusted by every other node in the cluster.

These certificates are used to authenticate:

- The write-replicate relationship, which allows centrally managed configuration data to be replicated from the leader node to each follower node.

- The shared write-only replication relationship, used for sharing configuration and logging data from a follower node to the leader node.

- The distributed consensus between all nodes for sharing data and cluster status information.

- Internode HTTPS communication.
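The guide does not describe how the internode HTTPS connections are configured. As a general illustration of certificate-based mutual authentication, the Python sketch below builds a TLS server context that rejects any peer not presenting a certificate signed by one of the trusted node CAs. The function name and file paths are hypothetical, and this is not Osirium PAM's own implementation.

```python
import ssl
from typing import Iterable, Optional


def internode_server_context(
    node_cert: Optional[str] = None,
    node_key: Optional[str] = None,
    peer_ca_files: Iterable[str] = (),
) -> ssl.SSLContext:
    """TLS server context that only accepts peers presenting a trusted certificate."""
    # A bare server context starts with no trusted CAs, so only the CAs loaded below count.
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    # Require every connecting node to present a client certificate.
    context.verify_mode = ssl.CERT_REQUIRED
    if node_cert:
        # This node's own certificate and private key (hypothetical file paths).
        context.load_cert_chain(certfile=node_cert, keyfile=node_key)
    for ca_file in peer_ca_files:
        # Trust the CA of each other node in the cluster.
        context.load_verify_locations(cafile=ca_file)
    return context
```

A connecting node would use the mirror image: an `ssl.PROTOCOL_TLS_CLIENT` context loaded with its own certificate and the peer CAs, so that both ends of every connection are authenticated.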

Node specific configuration

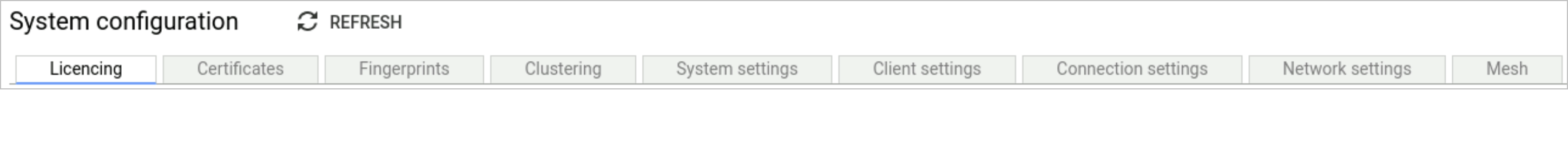

Some device parameters and system configuration settings are not synchronised from the leader node and must be configured on a per-node basis.

The following settings can only be set or updated on the individual node (leader or follower) through its own Admin Interface. These settings are not replicated within the cluster.

-

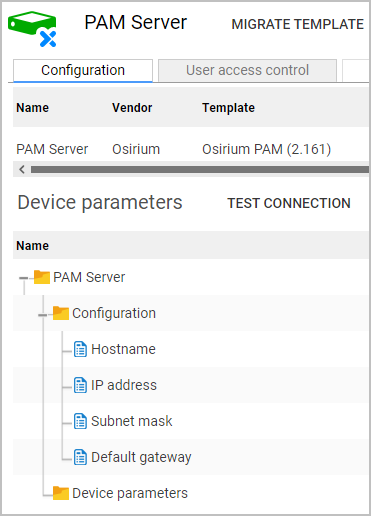

Device parameters: The PAM Server device parameters are those that are configured during the installation process. Changes to the device parameters can be made on the PAM Server > Configuration tab within the Admin Interface.

-

System configuration: The System configuration page is divided into a number of tabs with different configuration settings which can be applied to the node. These configurations are node specific so will have to be configured on a per node basis. The configuration settings will be saved locally in the database and not replicated across nodes or synchronised up to the master database.

There are however a couple of exceptions to note:

-

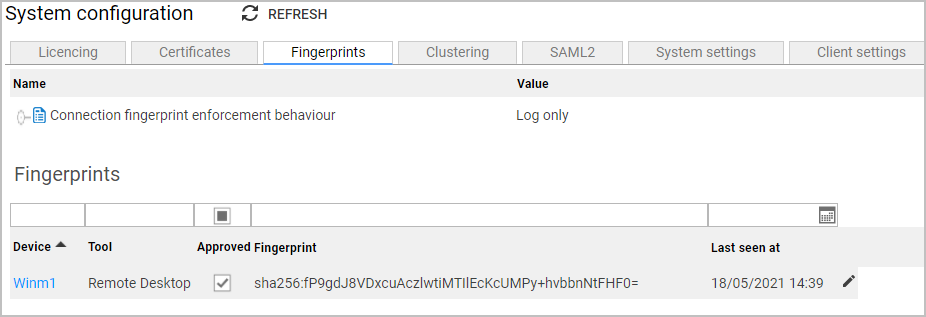

Fingerprints: The approval status of a fingerprint can only be changed on the leader node as the data is replicated. But the Connection fingerprint enforcement behaviour setting is node specific.

-

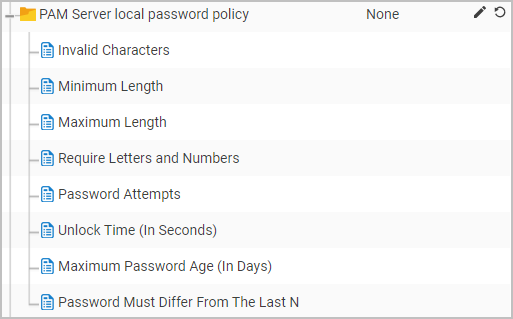

Osirium PAM local password policy: Any local password change requests made from either the user interface or Admin Interface for a user logged onto a follower node will be passed onto the leader node.

Only the password policy configured on the leader node will be used to verify and approve the new password being set.

Any Osirium PAM local password policy configured on a follower node will be ignored.

-
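The per-node settings and the two exceptions can be summarised as a lookup of where each change must be made. This is a hypothetical Python sketch: the setting keys paraphrase the list above and are not Osirium configuration names.

```python
from enum import Enum


class Scope(Enum):
    NODE = "configured on each node; not replicated"
    LEADER = "changed on the leader only"


# Hypothetical keys summarising the settings described in this section.
SETTING_SCOPES = {
    "device_parameters": Scope.NODE,
    "system_configuration": Scope.NODE,
    "fingerprint_enforcement_behaviour": Scope.NODE,
    "fingerprint_approval_status": Scope.LEADER,
    # Followers' own policies are ignored; the leader's policy applies cluster-wide.
    "local_password_policy": Scope.LEADER,
}


def where_to_change(setting: str, node_is_leader: bool) -> str:
    """Say which node's Admin Interface a change to `setting` must be made on."""
    if SETTING_SCOPES[setting] is Scope.NODE or node_is_leader:
        return "this node's Admin Interface"
    return "the leader node's Admin Interface"
```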

Shared Master Encryption Key

The leader node's master encryption key is very important. It must be kept safe and stored in your vault, as it is required for:

- Joining follower nodes to a cluster. All follower nodes require the leader node's master encryption key to decrypt a known phrase, which enables them to join the cluster.

- Restoring from backup. When you restore a cluster node from an Osirium backup file, you need to enter the cluster leader's master encryption key so that the node can decrypt and view the PAM Cluster database.
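The known-phrase check lets a follower prove it holds the same master key as the leader without the key itself being sent. The Python sketch below illustrates that idea but swaps in a different technique: an HMAC-SHA256 over a known phrase instead of decrypting one. The phrase and function names are hypothetical; Osirium PAM's actual phrase and cipher are not described in this guide.

```python
import hashlib
import hmac

# Hypothetical known phrase; Osirium PAM's real phrase and cipher are not documented here.
KNOWN_PHRASE = b"cluster-join-known-phrase"


def key_check_token(master_key: bytes) -> bytes:
    """Leader side: derive a verifier from the master key and the known phrase."""
    return hmac.new(master_key, KNOWN_PHRASE, hashlib.sha256).digest()


def follower_holds_leader_key(entered_key: bytes, leader_token: bytes) -> bool:
    """Follower side: recompute with the entered key and compare in constant time."""
    return hmac.compare_digest(key_check_token(entered_key), leader_token)
```

Whichever primitive is used, the point is the same: a follower given the wrong key cannot reproduce the expected result, so it cannot join.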

Cluster data replication

Each node in the cluster contains its own local database, in which each table has read/write access levels that depend on its classified status. The cluster leader holds the master writable copy of the database. All other nodes also hold a full copy of all the clustered data marked as shared or mastered.

Replication of data between the nodes depends on the status of each database table, as follows:

- Mastered: can only be updated on the leader node, and is then replicated as read-only to all follower nodes. Consists of data configured in the Manage section of the Admin Interface.

- Shared: every node can write to these tables and read the values written by all other nodes. Values written by one node can't be edited or deleted by other nodes. Consists of event logging, fingerprint configuration and user data exceptions.

- Local: tables that contain only data and configuration specific to the node. This data is NOT replicated to other nodes.
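The three table statuses can be read as a write rule plus a replication rule. This hypothetical Python sketch encodes them; `can_modify` and `is_replicated` are illustrative names, and `row_origin` means the node that first wrote the row (for a new row, the writing node itself).

```python
from enum import Enum


class TableStatus(Enum):
    MASTERED = "mastered"
    SHARED = "shared"
    LOCAL = "local"


def can_modify(status: TableStatus, node: str, leader: str, row_origin: str) -> bool:
    """May `node` insert, update or delete a row first written by `row_origin`?"""
    if status is TableStatus.MASTERED:
        # Only the leader writes; followers receive read-only replicas.
        return node == leader
    if status is TableStatus.SHARED:
        # Every node writes its own rows; rows from other nodes are read-only.
        return node == row_origin
    # LOCAL rows never leave the node, so every row a node sees here is its own.
    return True


def is_replicated(status: TableStatus) -> bool:
    """Mastered and shared tables are replicated; local tables are not."""
    return status is not TableStatus.LOCAL
```

Note that in shared tables even the leader cannot edit a row written by a follower, which matches the rule that read values can't be edited or deleted by other nodes.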

Cluster joining bundle

When joining a new node to an existing cluster, a cluster joining bundle is required during the setup and configuration of the new PAM Server.

The cluster joining bundle can only be generated and downloaded from the current leader node. Once generated, the bundle is valid for 24 hours or until the next bundle is generated. Once a bundle has been used to join a node to a cluster it is invalidated, so each node joining a cluster requires a newly generated bundle from the leader node. Each cluster joining bundle contains:

- The configured address of the cluster leader node, which the new node needs in order to connect to the leader node.

- A client certificate, which the cluster uses to identify the connection from the new node and which allows the leader node to verify the node that is joining. Once identification and verification have succeeded, the certificate is revoked and becomes invalid.

- The public half of the certificate authority, to be saved on the new node. Other nodes in the cluster use it to confirm whether the node is a trusted node.

- The ability to generate a new certificate containing the cryptographic keys, signed by the certificate authority on the new node.
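The validity rules for joining bundles (24-hour lifetime, single use, superseded by the next bundle) can be modelled as leader-side bookkeeping. This is a hypothetical Python sketch: `BundleIssuer`, `generate` and `redeem` are illustrative names, the bundle is reduced to a random ID, and the real contents (leader address, client certificate, CA public half) are not modelled. The current time is passed in explicitly so expiry is easy to test.

```python
import secrets
from datetime import datetime, timedelta
from typing import Optional

BUNDLE_LIFETIME = timedelta(hours=24)


class BundleIssuer:
    """Leader-side bookkeeping for cluster joining bundles (hypothetical model)."""

    def __init__(self) -> None:
        self._current_id: Optional[str] = None
        self._issued_at: Optional[datetime] = None

    def generate(self, now: datetime) -> str:
        # Replacing the stored ID invalidates any previously generated bundle.
        self._current_id = secrets.token_hex(16)
        self._issued_at = now
        return self._current_id

    def redeem(self, bundle_id: str, now: datetime) -> bool:
        """Accept the latest bundle once, within 24 hours of generation."""
        if self._current_id is None or self._issued_at is None:
            return False
        if not secrets.compare_digest(bundle_id.encode(), self._current_id.encode()):
            return False
        if now - self._issued_at > BUNDLE_LIFETIME:
            return False
        # Single use: forget the bundle so it cannot join a second node.
        self._current_id = None
        self._issued_at = None
        return True
```

Keeping only the latest bundle ID is what makes "valid until the next bundle is generated" automatic: an older bundle simply no longer matches.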

Supporting documentation

Further information relating to the Osirium PAM can be found here.